Are speaking tests subjective? We commonly test speaking proficiency with a face-to-face or telephone interview. No two such interviews are ever the same, even if the tester asks only questions from a list, because the answers are free-form language and therefore always differ. In fact, sticking rigidly to a prepared set of questions is not very effective, since it keeps the conversation from developing naturally.

So yes, an interview-style speaking test will necessarily be subjective to some degree, and the language testing community knows this. The real question is whether that subjectivity is a necessary evil or something benign, perhaps even advantageous in some ways.

Test Design

In designing any test, the three most important goals are practicality, validity, and reliability. To simplify: practicality means that the time and resources needed to conduct the test can reasonably be made available; validity means that the test actually measures what it claims to measure; and reliability means that if a student were to repeat the test, it would give the same result each time. From the test taker's point of view, asking whether a test is "fair" is mainly a question about its reliability.

Needless to say, a professionally conducted language test will not be influenced by personal factors. It is irrelevant whether testers agree or disagree with the opinions the examinee expresses. Even factual errors are ignored, since they have no bearing on what the test is meant to measure: the use of the language. This kind of subjectivity must be completely absent from the rating of a test.

Language Testers

Trained language testers use a set of techniques designed to elicit a sample of the examinee's proficiency. Ideally, the interview will feel like a friendly, casual conversation. Even so, it is actually a specialized procedure for exploring different aspects of the examinee's use of language, and the sample it produces is sufficient for a well-grounded judgment of overall performance.

If the same person takes the test twice within a short time, with different testers, a reliable test will usually give the same result. "Usually," because a person's performance always varies somewhat from one occasion to the next. It is the performance that gets rated, not the person.

If different testers rate the same performance (e.g. a recorded interview), a reliable test will very probably give the same result. In a gymnastics competition, for example, each judge on the panel scores independently. There is usually a range of scores, but as long as that range is not too large, the judging can be considered competent and fair. Scoring is consistent because gymnastics judges, like language testers, are trained and practiced in applying clear criteria to their decisions rather than relying merely on personal taste. Thankfully, language testers aren't deciding who gets gold, silver, and bronze, only to which of several broad categories each examinee is assigned.

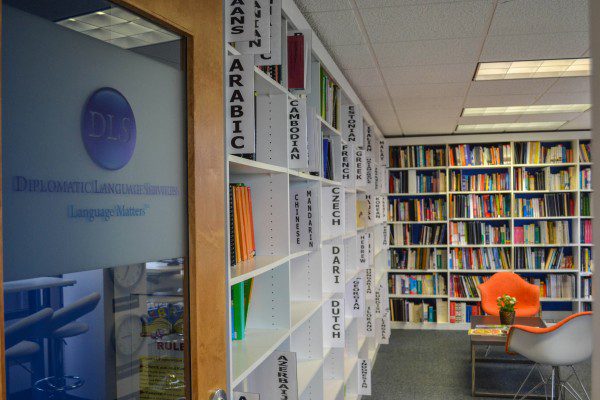

For more from DLS, check out our other blog posts and visit us on Facebook, LinkedIn, Instagram, or Twitter!